ED-PMP

Extrinsic Dexterity with Parameterized Manipulation Primitives

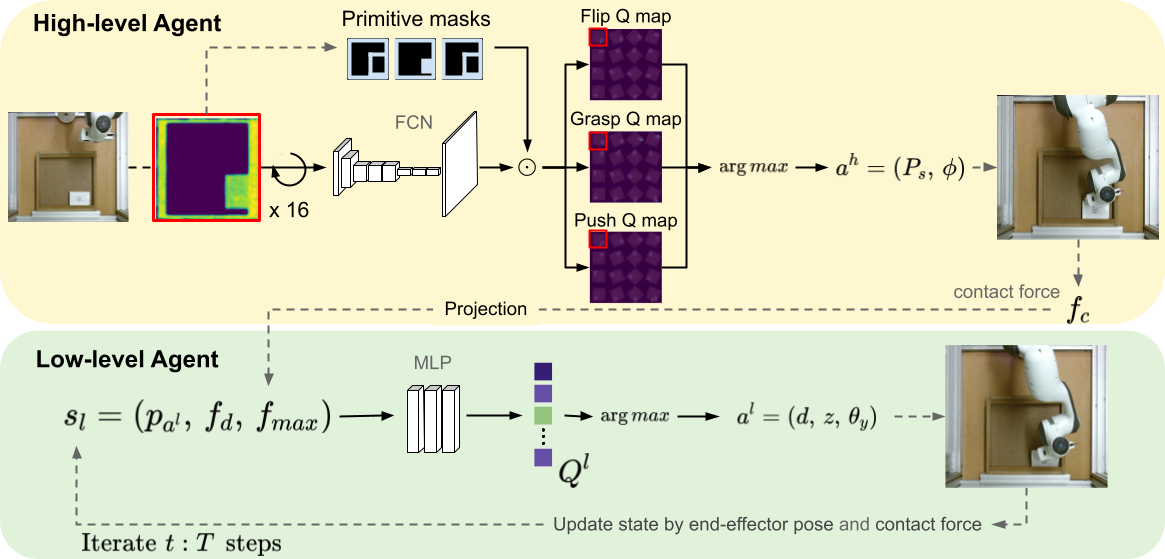

Our ED-PMP method aims to break complex tasks down into sub-tasks and to reduce the need for manual primitive design. It comprises a high-level agent and a low-level agent. High-Level Agent (top): The high-level agent feeds a height map to a DQN implemented as a fully convolutional network (FCN). The network outputs pixel-wise maps of Q-values, where each pixel corresponds to a starting pose and a primitive. Low-Level Agent (bottom): The low-level agent uses the current end-effector pose and contact force as the state of a DQN model. It iteratively estimates a sequence of actions to accomplish the sub-task within a designated number of iterations, denoted T.
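The two-level control flow described in the caption can be illustrated with a minimal Python sketch. It is not the authors' implementation. It assumes the Q maps are stored as an array of shape `(num_primitives, H, W)`, with one channel per primitive and each pixel marking a candidate starting position; the caption does not specify this layout. The names `select_primitive`, `run_low_level`, `policy`, `step`, and `is_done` are hypothetical placeholders, and greedy argmax selection stands in for whatever exploration strategy is used during training.

```python
import numpy as np


def select_primitive(q_maps):
    """Greedily pick a (primitive, row, col) triple from pixel-wise Q maps.

    q_maps: array of shape (num_primitives, H, W). This layout is an
    assumption made for illustration only.
    """
    q_maps = np.asarray(q_maps)
    flat_idx = int(np.argmax(q_maps))  # index of the largest Q-value
    primitive, row, col = np.unravel_index(flat_idx, q_maps.shape)
    return int(primitive), int(row), int(col)


def run_low_level(state, policy, step, is_done, T):
    """Roll out the low-level agent for at most T iterations.

    policy, step, and is_done are hypothetical callables standing in for
    the low-level DQN, the environment transition, and the sub-task
    success check, respectively.
    """
    actions = []
    for _ in range(T):
        action = policy(state)       # e.g. greedy action from the low-level DQN
        state = step(state, action)  # apply the action, observe the new state
        actions.append(action)
        if is_done(state):           # stop early once the sub-task succeeds
            break
    return state, actions
```

In this sketch, the high-level choice fixes which primitive to run and where it starts, and the low-level loop then refines the motion step by step until the sub-task succeeds or the budget of T iterations is exhausted.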